Officially known as Apache Hadoop, the software is developed as part of an open source project within the Apache Software Foundation. Several vendors offer commercial Hadoop distributions, although the number of Hadoop vendors has declined because of an overcrowded market and, later, competitive pressures driven by the increased deployment of big data systems in the cloud.

The shift to the cloud also enables users to store data in lower-cost cloud object storage services instead of Hadoop's namesake file system; as a result, Hadoop's role is being reduced in some big data architectures.

What is Hadoop?

Hadoop is an open source distributed processing framework that manages data processing and storage for big data applications in scalable clusters of computer servers. It is at the center of an ecosystem of big data technologies that are primarily used to support advanced analytics initiatives, including predictive analytics, data mining and machine learning.

Hadoop systems can handle various forms of structured and unstructured data, giving users more flexibility for collecting, processing, analyzing and managing data than relational databases and data warehouses provide.

Hadoop's ability to process and store different types of data makes it a particularly good fit for big data environments. They typically involve not only large amounts of data, but also a mix of structured transaction data and semi-structured and unstructured information, such as internet clickstream records, web server and mobile application logs, social media posts, customer emails and sensor data from the internet of things.

HISTORY OF HADOOP

Computer scientists Doug Cutting and Mike Cafarella created Hadoop, initially to support processing in the Nutch open source search engine and web crawler. After Google published technical papers detailing its Google File System and MapReduce programming framework, Cutting and Cafarella modified their earlier technology plans and developed a Java-based MapReduce implementation and a file system modeled on Google's.

In early 2006, those elements were split off from Nutch and became a separate Apache subproject, which Cutting named Hadoop after his son's stuffed elephant. At the same time, Cutting was hired by internet services company Yahoo, which became the first production user of Hadoop later in 2006.

Use of the framework grew over the next few years, and three independent Hadoop vendors were founded: Cloudera in 2008, MapR a year later and Hortonworks as a Yahoo spinoff in 2011. In addition, AWS launched a Hadoop cloud service called Elastic MapReduce in 2009. That was all before Apache released Hadoop 1.0, which became available in December 2011 after a succession of earlier releases.

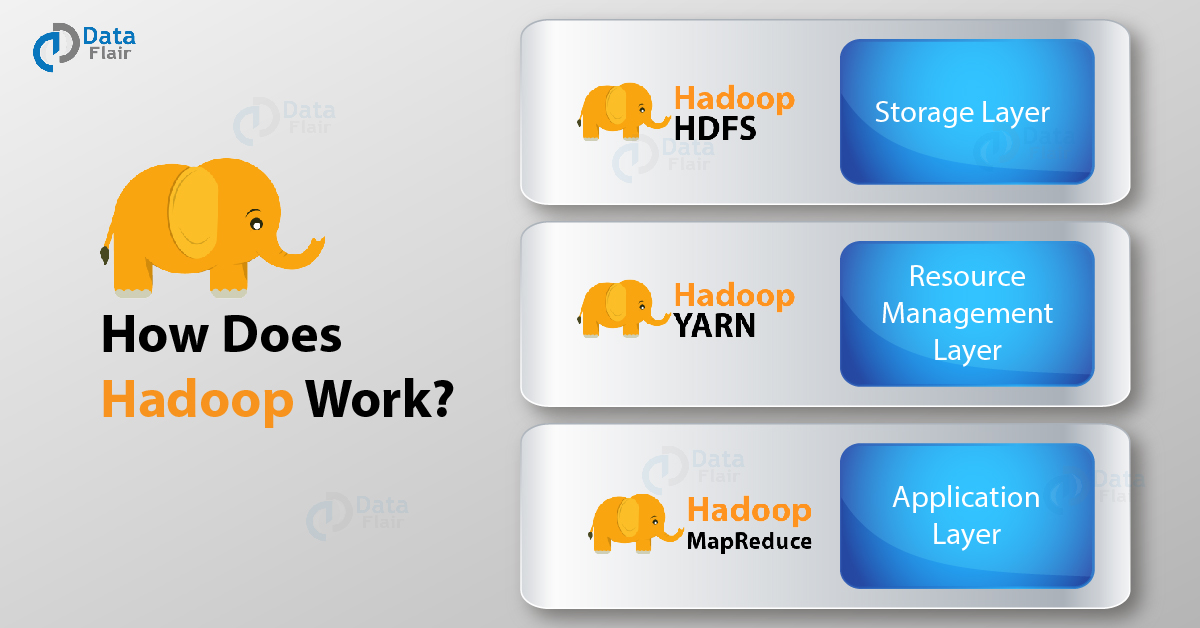

Main components of Hadoop

Hadoop isn't a single application; rather, it is a platform with several essential components that enable distributed data storage and processing. Together, these components form the Hadoop ecosystem.

Some of these are core components, which form the foundation of the framework, while others are supplementary components that bring add-on functionality into the Hadoop world.

1. YARN

YARN stands for Yet Another Resource Negotiator. It manages and schedules cluster resources and decides what should happen on each data node. The central master node that manages all processing requests is called the Resource Manager. The Resource Manager interacts with Node Managers; every slave data node has its own Node Manager to execute tasks.

2. HDFS

HDFS is the primary component of Hadoop and maintains the distributed file system. It makes it possible to store and replicate data across multiple servers.

HDFS has a Name Node and Data Nodes. Data Nodes are the commodity servers where the data is actually stored. The Name Node, on the other hand, holds metadata describing which data is stored on which nodes. An application first asks the Name Node where the data lives, and the data itself is then read from or written to the Data Nodes.
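To make the split between metadata and data more concrete, here is a minimal sketch of how a file might be cut into blocks and replicated across Data Nodes. The function name `place_blocks`, the node names and the round-robin placement are assumptions made for illustration only; real HDFS uses its own, more sophisticated placement policy.

```python
def place_blocks(file_size, block_size, data_nodes, replication=3):
    """Return toy Name Node metadata: block index -> Data Nodes holding a replica.

    Hypothetical helper for illustration; not part of any Hadoop API.
    """
    if replication > len(data_nodes):
        raise ValueError("replication factor exceeds number of data nodes")
    num_blocks = -(-file_size // block_size)  # ceiling division
    metadata = {}
    for block in range(num_blocks):
        # Simple round-robin placement: each block lands on `replication`
        # distinct nodes, starting one node further along each time.
        metadata[block] = [
            data_nodes[(block + r) % len(data_nodes)] for r in range(replication)
        ]
    return metadata


nodes = ["dn1", "dn2", "dn3", "dn4"]
metadata = place_blocks(file_size=300, block_size=128, data_nodes=nodes)
for block, replicas in metadata.items():
    print(block, replicas)
```

In this toy model the dictionary plays the role of the Name Node's metadata, while the node names stand in for the Data Nodes that would hold the actual bytes.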

3. MapReduce

MapReduce is a programming model that was first used by Google for indexing its search operations. It is the logic used to split data into smaller sets, and it works on the basis of two functions, Map and Reduce, that parse the data in a quick and efficient manner.

First, the Map function groups, filters, and sorts multiple data sets in parallel to produce tuples. Then, the Reduce function aggregates the data from these tuples to produce the desired output.
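The classic way to picture the two functions is a word count. The sketch below runs in plain Python on a single machine and only imitates the model; the names `map_phase`, `shuffle` and `reduce_phase` are chosen for this example and are not Hadoop APIs.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) tuple for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group all emitted values by key, as happens between Map and Reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: aggregate all values that share a key."""
    return key, sum(values)


def word_count(lines):
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


counts = word_count(["Hadoop stores data", "Hadoop processes data"])
print(counts)
```

In a real cluster, the Map calls would run in parallel on the nodes that hold the input, and the grouped tuples would be sent across the network to the Reduce tasks.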

Other components of Hadoop

There are several other commonly used components in the Hadoop ecosystem. Let's take a look at a few of them.

1. Flume

Flume is a big data ingestion tool that acts as a courier service between multiple data sources and HDFS. It collects, aggregates, and sends huge amounts of streaming data generated by applications such as social media sites, IoT apps, and ecommerce portals into HDFS.

In addition, Flume:

- Has a distributed architecture.

- Has the flexibility to collect data in real time.

- Is fault-tolerant.

- Ensures reliable data transfer.

Data sources communicate with Flume agents; each agent has a source, a channel, and a sink. The source collects data from the sender, the channel temporarily stores the data, and finally, the sink transfers the data to the destination, which is a Hadoop server.
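The source, channel and sink roles can be sketched as a tiny in-memory pipeline. The `ToyAgent` class below is a hypothetical stand-in written for this article; it does not use Flume itself, and the `destination` list merely plays the part of the Hadoop server.

```python
from collections import deque


class ToyAgent:
    """Hypothetical model of an agent with a source, a channel and a sink."""

    def __init__(self):
        self.channel = deque()   # temporary buffer between source and sink
        self.destination = []    # stand-in for the Hadoop server

    def source(self, event):
        """Source: accept an event from a sender and put it on the channel."""
        self.channel.append(event)

    def sink(self):
        """Sink: drain the channel in arrival order into the destination."""
        delivered = 0
        while self.channel:
            self.destination.append(self.channel.popleft())
            delivered += 1
        return delivered


events = ["click /home", "click /cart", "purchase #17"]
agent = ToyAgent()
for event in events:
    agent.source(event)
delivered = agent.sink()
print(delivered, agent.destination)
```

Because the channel holds events until the sink has moved them on, the source and the sink can work at different speeds without losing data.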

2. Sqoop

Sqoop is another data ingestion tool like Flume. While Flume works on unstructured or semi-structured data, Sqoop is used to export data from and import data into relational databases. As most enterprise data is stored in relational databases, Sqoop is used to import that data into Hadoop for analysts to examine.

Database admins and developers can use a simple command-line interface to export and import data. Sqoop converts these commands into MapReduce jobs and runs them against HDFS using YARN. Like Flume, Sqoop is fault-tolerant and performs concurrent operations.

3. Hive

Hive is a data warehousing system that helps to query large datasets in HDFS. Before Hive, developers were faced with the challenge of creating complex MapReduce jobs to query Hadoop data. Hive uses HQL (Hive Query Language), which resembles the syntax of SQL. Since most developers come from a SQL background, Hive is easier for them to pick up.

The advantage of Hive is that a driver acts as an interface between the application and HDFS. It exposes the Hadoop file system as tables, converts HQL into MapReduce jobs, and vice versa. So while developers and database administrators gain the benefit of batch processing large datasets, they can use simple, familiar queries to achieve that. Originally developed by the Facebook team, Hive is now an open source technology.

4. HBase

HBase is a non-relational database that sits on top of HDFS. One of the challenges with HDFS is that it can only do batch processing. So for simple interactive queries, data still has to be processed in batches, leading to high latency.

HBase solves this challenge by allowing queries for single rows across huge tables with low latency. It achieves this by keeping rows indexed by row key, so a single row can be located without scanning the whole table. It is modeled on Google's Bigtable, which runs on top of the Google File System (GFS).

HBase is scalable, has failure support when a node goes down, and works well with unstructured as well as semi-structured data. Consequently, it is well suited to querying big data stores for analytical purposes.
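The difference between a single-row lookup and a batch pass over all the data can be pictured with a toy table keyed by row key. The `ToyTable` class and its `put`, `get` and `scan` methods are written for this example only; they imitate the idea of keyed access and are not HBase's storage engine or client API.

```python
import bisect


class ToyTable:
    """Hypothetical in-memory table addressed by row key."""

    def __init__(self):
        self._keys = []   # row keys kept in sorted order for range scans
        self._rows = {}   # row key -> {column: value}

    def put(self, row_key, columns):
        if row_key not in self._rows:
            bisect.insort(self._keys, row_key)
            self._rows[row_key] = {}
        self._rows[row_key].update(columns)

    def get(self, row_key):
        """Fetch one row directly by key, without touching other rows."""
        return self._rows.get(row_key)

    def scan(self, start, stop):
        """Return rows with start <= key < stop, in key order."""
        lo = bisect.bisect_left(self._keys, start)
        hi = bisect.bisect_left(self._keys, stop)
        return [(key, self._rows[key]) for key in self._keys[lo:hi]]


table = ToyTable()
table.put("user#002", {"name": "Bo"})
table.put("user#001", {"name": "Al"})
table.put("user#003", {"name": "Cy"})
print(table.get("user#001"))
print(table.scan("user#001", "user#003"))
```

The point of the sketch is that `get` answers a question about one row immediately, whereas a batch job would have to read every row to find it.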

HOW HADOOP WORKS

Hadoop works through two main systems, HDFS and MapReduce. Between them, they rely on the following five services.

- HDFS stores the data used by the Hadoop program. It consists of a Name Node (master node) that tracks files, manages the file system, and holds the metadata for all the data stored in it.

- The Data Node stores the data in blocks in HDFS and is a slave node to the master.

- A Secondary Name Node handles the metadata checkpoints of the file system.

- The Job Tracker receives requests for MapReduce processing from the user.

- The Task Tracker serves as a slave node to the Job Tracker. It takes the job and associated code and applies them to the relevant file.

The Job Tracker and Task Tracker make up the MapReduce engine. Every MapReduce engine contains one Job Tracker that receives MapReduce job requests from the user and sends them to the appropriate Task Tracker. The goal is to keep each task on the node closest to the data being processed.
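The idea of keeping work close to the data can be sketched as a simple selection rule. The function `pick_task_tracker` and the node names below are assumptions made for illustration; an actual scheduler weighs more factors than this.

```python
def pick_task_tracker(block_locations, free_slots):
    """Choose a node for a task, preferring nodes that already hold the data.

    block_locations: nodes that store a replica of the input block.
    free_slots: node name -> number of free task slots.
    Hypothetical helper, not a Hadoop API.
    """
    # First choice: a node that holds the block and has a free slot.
    for node in block_locations:
        if free_slots.get(node, 0) > 0:
            return node, "data-local"
    # Fallback: any node with a free slot; the data must travel over the network.
    for node, slots in free_slots.items():
        if slots > 0:
            return node, "remote"
    return None, "no free slot"


slots = {"dn1": 1, "dn2": 0, "dn3": 2}
choice = pick_task_tracker(["dn2", "dn3"], slots)
print(choice)
```

Here the block lives on `dn2` and `dn3`; `dn2` is busy, so the task goes to `dn3` rather than to `dn1`, which has a free slot but no local copy of the data.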

Future

Hadoop has made its presence felt in a big way in the computing industry. This is because it has finally made large-scale data analytics a practical reality. From analyzing site visits to fraud detection to banking applications, its uses are diverse.

With Talend Open Studio for Big Data, it is easy to integrate your Hadoop setup into any data architecture. Talend says it provides more built-in data connectors than any other data management solution, allowing you to build seamless data flows between Hadoop and any major file format.